There are three types of media: image, audio, and video.

Common image resolutions are Full HD (1920 x 1080) and 4K (4096 x 2160; the consumer UHD "4K" format is 3840 x 2160).

Aspect ratio is the ratio of width to height. Common values are 4:3 and 16:9.
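A resolution can be reduced to its aspect ratio by dividing width and height by their greatest common divisor. A minimal Python sketch (the function name is illustrative):

```python
from math import gcd


def aspect_ratio(width: int, height: int) -> str:
    """Reduce width:height to lowest terms, e.g. 1920x1080 -> "16:9"."""
    d = gcd(width, height)
    return f"{width // d}:{height // d}"


for w, h in [(640, 480), (1920, 1080), (4096, 2160)]:
    print(f"{w}x{h} -> {aspect_ratio(w, h)} ({w / h:.2f}:1)")
```

DCI 4K (4096 x 2160) reduces to 256:135, about 1.90:1, which is slightly wider than 16:9 (about 1.78:1).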

Sample rate (frequency) is the number of audio samples per second. For example, 48 kHz means 48,000 audio samples per second.

A channel is a single sequence of audio samples. Common channel layouts:

Mono: Single channel

Stereo: 2 channels

5.1: 6 channels (five full-range channels plus one low-frequency effects channel, the ".1")
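Sample rate and channel count together determine how much data uncompressed (PCM) audio produces. A rough sketch, assuming 16-bit samples (bit depth is not covered above, and 24-bit is also common):

```python
def pcm_bitrate_bps(sample_rate_hz: int, bit_depth: int, channels: int) -> int:
    """Uncompressed PCM data rate: samples/s x bits/sample x channels."""
    return sample_rate_hz * bit_depth * channels


# Assumption: 16-bit samples at 48 kHz.
for name, channels in [("mono", 1), ("stereo", 2), ("5.1", 6)]:
    bps = pcm_bitrate_bps(48_000, 16, channels)
    mb_per_min = bps * 60 / 8 / 1_000_000
    print(f"{name}: {bps / 1000:.0f} kbit/s, {mb_per_min:.2f} MB per minute")
```

Under these assumptions stereo comes to 1536 kbit/s (11.52 MB per minute) and 5.1 to 4608 kbit/s.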

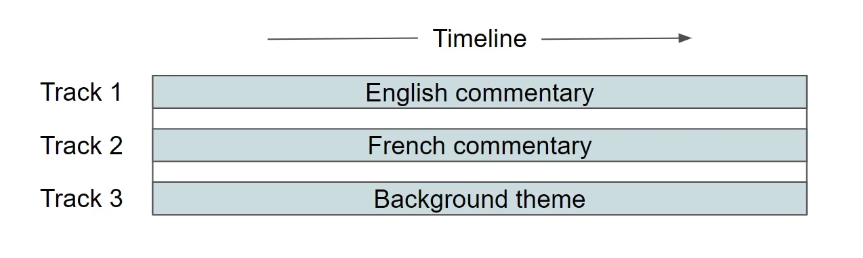

An audio track is a collection of channels.

A media file can contain multiple audio tracks. The tracks share the same timeline, and the user can switch individual tracks on or off (for example, to choose a different language).
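The relationship between a file, its tracks, and their channels can be pictured as a small data model. This is a hypothetical sketch for illustration only; the class and field names are made up and do not reflect any container's real structure:

```python
from dataclasses import dataclass, field


@dataclass
class AudioTrack:
    language: str
    channels: list[str]   # channel labels, e.g. ["L", "R"] for stereo
    enabled: bool = True


@dataclass
class MediaFile:
    audio_tracks: list[AudioTrack] = field(default_factory=list)

    def select_language(self, language: str) -> None:
        """Switch on the track(s) in `language` and switch the others off."""
        for track in self.audio_tracks:
            track.enabled = track.language == language

    def active_tracks(self) -> list[AudioTrack]:
        return [t for t in self.audio_tracks if t.enabled]


movie = MediaFile([
    AudioTrack("en", ["FL", "FR", "FC", "LFE", "SL", "SR"]),  # 5.1 mix
    AudioTrack("fr", ["L", "R"]),                             # stereo dub
])
movie.select_language("fr")
print([t.language for t in movie.active_tracks()])  # ['fr']
```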

Video is a sequence of images, and each image is called a frame. Frame rate is the number of frames played per second (fps). Common frame rates are 25 fps and 29.97 fps.
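The 29.97 fps rate is exactly 30000/1001 frames per second, so using exact fractions avoids drift when converting between frame counts and time. A small sketch using Python's `fractions` module (the helper names are illustrative):

```python
import math
from fractions import Fraction

FPS_25 = Fraction(25)
FPS_29_97 = Fraction(30000, 1001)  # the exact value behind "29.97"


def frame_duration_ms(fps: Fraction) -> Fraction:
    """How long each frame is shown, in milliseconds."""
    return 1000 / fps


def frames_in(seconds: int, fps: Fraction) -> int:
    """Number of whole frames played in `seconds` of video."""
    return math.floor(seconds * fps)


for fps in (FPS_25, FPS_29_97):
    print(f"{float(fps):.3f} fps: {float(frame_duration_ms(fps)):.3f} ms/frame, "
          f"{frames_in(60, fps)} frames per minute")
```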

Video compression exploits two kinds of redundancy:

Spatial redundancy: within a single frame, neighboring pixels usually have similar values, so a region can be encoded with fewer bits. The same idea is used in image compression, since a frame is itself an image.

Temporal redundancy: consecutive frames usually differ very little, so a frame can be stored as its difference from a previous frame instead of as a full image.
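A toy illustration of both ideas on short lists of 8-bit pixel values (the data is made up). Real codecs use block transforms (DCT-like) and motion-compensated prediction rather than plain differences, but the principle is similar: small or zero residuals need fewer bits to store.

```python
def delta_encode_row(row: list[int]) -> list[int]:
    """Spatial redundancy: keep the first pixel, then store each pixel as the
    difference from its left neighbour."""
    return [row[0]] + [cur - prev for prev, cur in zip(row, row[1:])]


def frame_residual(prev_frame: list[int], cur_frame: list[int]) -> list[int]:
    """Temporal redundancy: store the current frame as its per-pixel
    difference from the previous frame."""
    return [cur - prev for prev, cur in zip(prev_frame, cur_frame)]


row = [100, 101, 101, 102, 104, 104]
print(delta_encode_row(row))  # [100, 1, 0, 1, 2, 0]

prev = [10, 10, 10, 10, 200, 10]
cur = [10, 10, 10, 10, 201, 10]
residual = frame_residual(prev, cur)
print(residual)  # [0, 0, 0, 0, 1, 0]
print(sum(1 for r in residual if r != 0), "of", len(residual), "pixels changed")
```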

Codec

The word codec comes from joining two words: coder + decoder.

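Raw (uncompressed) images and video are very large and impractical to store and transfer, which is why they are compressed (encoded) with a codec and decompressed (decoded) for playback.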
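To put a number on "very large", here is a rough estimate of the uncompressed video data rate, assuming 8 bits per color component and three components per pixel (24 bits per pixel, no chroma subsampling):

```python
def raw_video_bitrate_bps(width: int, height: int, fps: int,
                          bits_per_pixel: int = 24) -> int:
    """Uncompressed video data rate in bits per second."""
    return width * height * bits_per_pixel * fps


for name, (w, h) in {"HD": (1920, 1080), "4K": (4096, 2160)}.items():
    bps = raw_video_bitrate_bps(w, h, fps=25)
    print(f"{name} @ 25 fps: {bps / 1e9:.2f} Gbit/s, {bps / 8 / 1e6:.0f} MB/s")
```

Under these assumptions, 1920 x 1080 at 25 fps is about 1.24 Gbit/s before compression; compressed delivery bitrates are typically orders of magnitude lower.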

Video codecs:

- H.264 (AVC) is the most widely used video codec.

- H.265 (HEVC) is its successor and provides better compression.

- VP9 is an open codec from Google.

- ProRes is from Apple and is widely used in editing and post-production.

Audio codecs:

- PCM is uncompressed.

- AAC and MP3 are compressed (lossy) codecs.

Container

A container is a wrapper or package for media. It describes how the data (the audio and video tracks plus metadata) is organized in the file, like an index of the items in the file.

Audio/video containers: MP4, MXF (common in professional cameras and broadcast), QuickTime (MOV).

Audio containers: WAV, M4A.
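The "index" role of a container can be sketched as a table that maps presentation times to byte offsets, which lets a player jump to a position without reading everything before it. Because many frames are stored as differences from earlier frames, decoding has to start at a frame that is stored whole (a key frame), so the index below lists key frames. This is a simplified, hypothetical model; real formats such as MP4 keep this information in their own structures, and the numbers are made up:

```python
import bisect

# Hypothetical index of key frames: (presentation time in seconds, byte offset).
KEYFRAME_INDEX = [(0.0, 48), (2.0, 180_000), (4.0, 361_500), (6.0, 540_200)]


def offset_for_time(index: list[tuple[float, int]], t: float) -> int:
    """Return the byte offset of the last indexed key frame at or before t."""
    times = [ts for ts, _ in index]
    i = bisect.bisect_right(times, t) - 1
    if i < 0:
        raise ValueError(f"time {t} is before the first indexed frame")
    return index[i][1]


print(offset_for_time(KEYFRAME_INDEX, 5.0))  # 361500
```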

Transcoding

Transcoding is converting media from one codec to another: the source is decoded and then re-encoded.

There are several reasons to transcode. Some codecs preserve quality better while others compress better, and the consuming application may only support certain codecs. Transcoding is also used for frame rate conversion to support different regions (for example, 25 fps in PAL regions and 29.97 fps in NTSC regions), for bitrate conversion when delivering over a network, and for adding an overlay.
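For bitrate conversion, the basic arithmetic is that size equals bitrate times duration. A small sketch; the 5 and 2 Mbit/s figures are example values, not recommendations, and container overhead is ignored:

```python
def size_bytes(bitrate_bps: float, duration_s: float) -> float:
    """Approximate stream size: bits per second x seconds / 8 bits per byte."""
    return bitrate_bps * duration_s / 8


def bitrate_for_size(target_bytes: float, duration_s: float) -> float:
    """Average bitrate needed to fit a stream into target_bytes."""
    return target_bytes * 8 / duration_s


print(size_bytes(5_000_000, 60) / 1e6)          # 37.5 (MB for 1 minute at 5 Mbit/s)
print(size_bytes(2_000_000, 60) / 1e6)          # 15.0
print(round(bitrate_for_size(10_000_000, 60)))  # 1333333 bit/s, about 1.33 Mbit/s
```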

Transmuxing

Transmuxing is simply repackaging the same encoded streams from one container into another, without re-encoding. It is used, for example, to support the streaming playback features described below.
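The difference between transmuxing and transcoding can be captured in a tiny model in which a file is a container holding encoded streams. This is a hypothetical illustration with made-up names, not a real library API:

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Stream:
    kind: str   # "video" or "audio"
    codec: str  # e.g. "h264", "aac"


@dataclass(frozen=True)
class MediaFile:
    container: str               # e.g. "mp4", "ts"
    streams: tuple[Stream, ...]


def transmux(media: MediaFile, container: str) -> MediaFile:
    """Repackage: same encoded streams, new container, no re-encoding."""
    return replace(media, container=container)


def transcode(media: MediaFile, kind: str, codec: str) -> MediaFile:
    """Decode and re-encode every stream of `kind` with a different codec."""
    streams = tuple(replace(s, codec=codec) if s.kind == kind else s
                    for s in media.streams)
    return replace(media, streams=streams)


src = MediaFile("mp4", (Stream("video", "h264"), Stream("audio", "aac")))
print(transmux(src, "ts"))
print(transcode(src, "video", "hevc"))
```

Because transmuxing never touches the encoded data, it is fast and lossless, whereas transcoding costs CPU time and, with lossy codecs, some quality.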

Audio transcoding is also used to mix channels (for example, downmixing 5.1 to stereo) and to normalize volume.
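A minimal sketch of both operations on floating-point samples in the range -1.0 to 1.0. The downmix follows one common convention (center and surround channels mixed in at about -3 dB, a gain of 1/√2, with the LFE channel dropped); other coefficient sets exist. Peak normalization is shown as a simple stand-in for volume normalization; broadcast loudness normalization usually measures perceived loudness (LUFS) instead.

```python
import math

# One common downmix convention: center and surrounds at -3 dB, LFE dropped.
MIX_GAIN = 1 / math.sqrt(2)  # ~0.707


def downmix_51_to_stereo(fl: float, fr: float, c: float, lfe: float,
                         sl: float, sr: float) -> tuple[float, float]:
    """Mix one 5.1 sample frame down to a (left, right) stereo pair."""
    left = fl + MIX_GAIN * c + MIX_GAIN * sl
    right = fr + MIX_GAIN * c + MIX_GAIN * sr
    return left, right  # lfe is intentionally unused in this convention


def peak_normalize(samples: list[float], target_peak: float = 1.0) -> list[float]:
    """Scale samples so the loudest one reaches target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)
    gain = target_peak / peak
    return [s * gain for s in samples]


left, right = downmix_51_to_stereo(0.6, 0.2, 0.5, 0.9, 0.4, 0.0)
print(round(left, 3), round(right, 3))  # the mix can exceed 1.0 ...
print(peak_normalize([left, right]))    # ... so normalize it back into range
```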

Streaming playback

Desired features when playback of a video starts include:

- Instant playback: playback starts without downloading the full file.

- Seeking: playback can start from any point in the middle of the file without downloading everything up to that point.

- Adaptive bitrate and resolution: when network bandwidth changes or the window is resized, the player switches to the rendition with the appropriate resolution and bitrate, giving a better experience and saving network traffic.
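A simplified version of the adaptive decision: pick the highest-bitrate rendition that fits within a safety margin of the measured bandwidth and is not taller than the player window. The ladder, the 0.8 safety factor, and all names are assumptions for illustration; real players use more elaborate heuristics (buffer level, throughput history).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    height: int       # vertical resolution in pixels
    bitrate_bps: int  # encoded bitrate in bits per second


# Hypothetical bitrate ladder.
LADDER = [
    Rendition("360p", 360, 800_000),
    Rendition("720p", 720, 2_800_000),
    Rendition("1080p", 1080, 5_000_000),
]


def choose_rendition(ladder: list[Rendition], measured_bps: float,
                     window_height: int, safety: float = 0.8) -> Rendition:
    """Pick the highest-bitrate rendition that fits the bandwidth budget and
    the player window; fall back to the lowest bitrate if nothing fits."""
    budget = measured_bps * safety
    fits = [r for r in ladder
            if r.bitrate_bps <= budget and r.height <= window_height]
    if not fits:
        return min(ladder, key=lambda r: r.bitrate_bps)
    return max(fits, key=lambda r: r.bitrate_bps)


print(choose_rendition(LADDER, 4_000_000, 1080).name)   # 720p
print(choose_rendition(LADDER, 10_000_000, 1080).name)  # 1080p
print(choose_rendition(LADDER, 10_000_000, 400).name)   # 360p (small window)
```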

Streaming protocols

RTMP (Real-Time Messaging Protocol) was created by Macromedia for Flash. Classic RTMP does not support modern codecs such as HEVC, but it remains popular on the ingest side because of its low latency.

HTTP: playback does not need a dedicated streaming server, because ordinary web servers and CDNs can deliver the media. Browsers support HTML5 video and Media Source Extensions (MSE), which enable modern adaptive streaming with HLS and MPEG-DASH.

SRT (Secure Reliable Transport) is a newer, UDP-based, low-latency protocol. It has an error-correction mechanism to recover from packet loss, which makes it a good candidate for ingest.

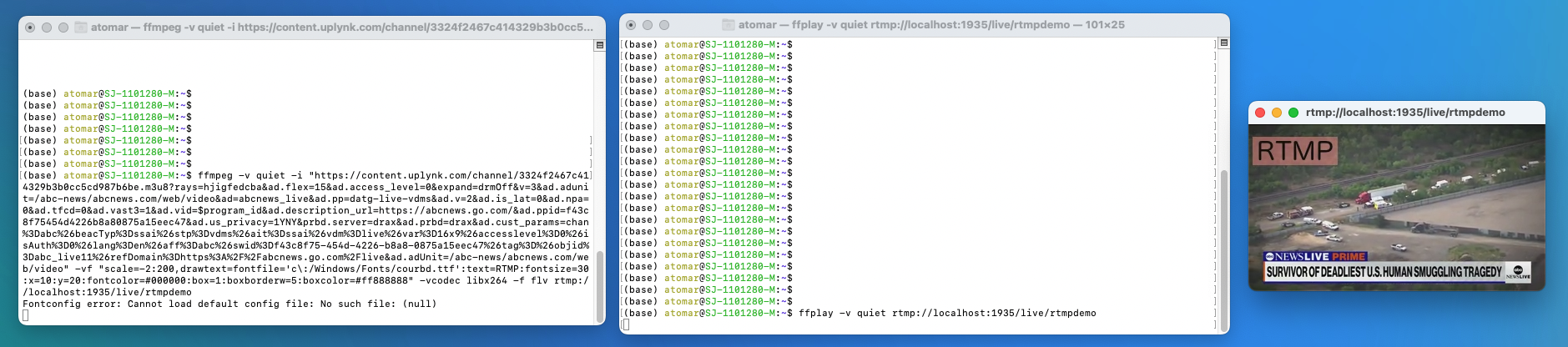

Demo

Here is a simple demo that takes a live stream from ABC News, pushes it to a local RTMP server, and then reads the stream back with ffplay to show it to the user.

The first command pulls the live news stream, adds an overlay on it, and pushes it to the RTMP server.

The second command pulls the stream from the RTMP server and plays it.
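A hedged sketch of such a pipeline, not the author's exact commands. The Python below only builds the two command lines. It assumes ffmpeg and ffplay are installed and that a local RTMP server accepts streams at the hypothetical address `rtmp://localhost/live/demo`; the source URL is a placeholder, and a filled box drawn with ffmpeg's `drawbox` filter stands in for the overlay.

```python
import shlex

# Hypothetical placeholders: replace with a real live source and the address
# of your own local RTMP server.
SOURCE_URL = "https://example.com/live/playlist.m3u8"
RTMP_URL = "rtmp://localhost/live/demo"


def publish_command(source: str, rtmp_url: str) -> list[str]:
    """ffmpeg command: pull `source`, draw a box overlay, push to RTMP."""
    return [
        "ffmpeg",
        "-i", source,
        # Overlay: a semi-transparent filled box in the top-left corner.
        # Filtering means the video has to be re-encoded.
        "-vf", "drawbox=x=10:y=10:w=160:h=40:color=red@0.6:t=fill",
        "-c:v", "libx264",
        "-c:a", "aac",
        # RTMP carries FLV-formatted data, so the output format is flv.
        "-f", "flv",
        rtmp_url,
    ]


def play_command(rtmp_url: str) -> list[str]:
    """ffplay command: read the stream back from the RTMP server."""
    return ["ffplay", rtmp_url]


if __name__ == "__main__":
    # Print the commands; run each in its own terminal, or pass the lists
    # to subprocess.run(...) once the placeholders are filled in.
    print(shlex.join(publish_command(SOURCE_URL, RTMP_URL)))
    print(shlex.join(play_command(RTMP_URL)))
```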

Adaptive Streaming

HLS (HTTP Live Streaming) is from Apple. It supports H.264/AVC and H.265/HEVC and uses the MPEG-TS (transport stream) container; support for fMP4 was added more recently. Its playlists use the .m3u8 extension, so an HLS stream essentially consists of .m3u8 playlists and .ts segment files.
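An HLS master playlist lists the available renditions, each introduced by an `#EXT-X-STREAM-INF` tag whose `BANDWIDTH` and `RESOLUTION` attributes a player uses for adaptive switching. A minimal parsing sketch; the sample playlist is made up, and a production player should use a complete parser that follows the full HLS specification (RFC 8216).

```python
import re

# Attribute list entries: KEY=value or KEY="quoted, value".
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')

SAMPLE_MASTER = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360,CODECS="avc1.4d401e,mp4a.40.2"
360p/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1280x720,CODECS="avc1.4d401f,mp4a.40.2"
720p/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080,CODECS="avc1.640028,mp4a.40.2"
1080p/index.m3u8
"""


def parse_master_playlist(text: str) -> list[dict]:
    """Return one dict per variant: uri, bandwidth, resolution, codecs."""
    variants, pending = [], None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("#EXT-X-STREAM-INF:"):
            attrs = line.split(":", 1)[1]
            pending = {k: v.strip('"') for k, v in ATTR_RE.findall(attrs)}
        elif line and not line.startswith("#") and pending is not None:
            # The URI line that follows the tag belongs to that variant.
            variants.append({
                "uri": line,
                "bandwidth": int(pending["BANDWIDTH"]),
                "resolution": pending.get("RESOLUTION"),
                "codecs": pending.get("CODECS"),
            })
            pending = None
    return variants


for v in parse_master_playlist(SAMPLE_MASTER):
    print(v["resolution"], v["bandwidth"], v["uri"])
```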

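MPEG-DASH (Dynamic Adaptive Streaming over HTTP) came out of a collaborative effort and is an international standard (ISO/IEC 23009-1). It is codec-agnostic, so it supports H.264, H.265, VP9 and others. It typically uses the fMP4 (fragmented MP4) container and describes the stream in an XML manifest with the .mpd extension (Media Presentation Description), so a DASH stream essentially consists of an .mpd manifest and .m4s (fragmented MP4) segment files.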
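With a DASH `SegmentTemplate`, segment URLs are generated from a pattern containing `$Number$`, so seeking reduces to arithmetic: divide the seek time by the segment duration. A sketch assuming fixed-duration segments and a plain `$Number$` placeholder (format variants such as `$Number%05d$` are not handled); the template string and numbers are hypothetical, and real manifests may instead use a `SegmentTimeline` with variable durations.

```python
import math


def segment_number(t_seconds: float, duration: int, timescale: int,
                   start_number: int = 1) -> int:
    """Segment containing time t, for fixed-duration segments.
    `duration` is given in ticks, where `timescale` is ticks per second."""
    return start_number + math.floor(t_seconds * timescale / duration)


def segment_url(media_template: str, number: int) -> str:
    """Fill in the $Number$ placeholder of a SegmentTemplate media pattern."""
    return media_template.replace("$Number$", str(number))


# Hypothetical manifest values: 4-second segments (4000 / 1000), numbered from 1.
TEMPLATE = "video/720p/chunk-$Number$.m4s"
n = segment_number(10.0, duration=4000, timescale=1000)
print(n, segment_url(TEMPLATE, n))  # 3 video/720p/chunk-3.m4s
```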

Cheers – Amit Tomar