Speech-to-text technology converts spoken language into written text using automatic speech recognition (ASR). It is widely used for applications such as voice assistants, transcription services, real-time captions, and hands-free communication.

This technology relies on machine learning, natural language processing (NLP), and deep learning algorithms to improve accuracy by understanding accents, dialects, and contextual cues. It is beneficial for accessibility, productivity, and user convenience across various industries, including healthcare, customer service, and education.

Fast Fourier transform

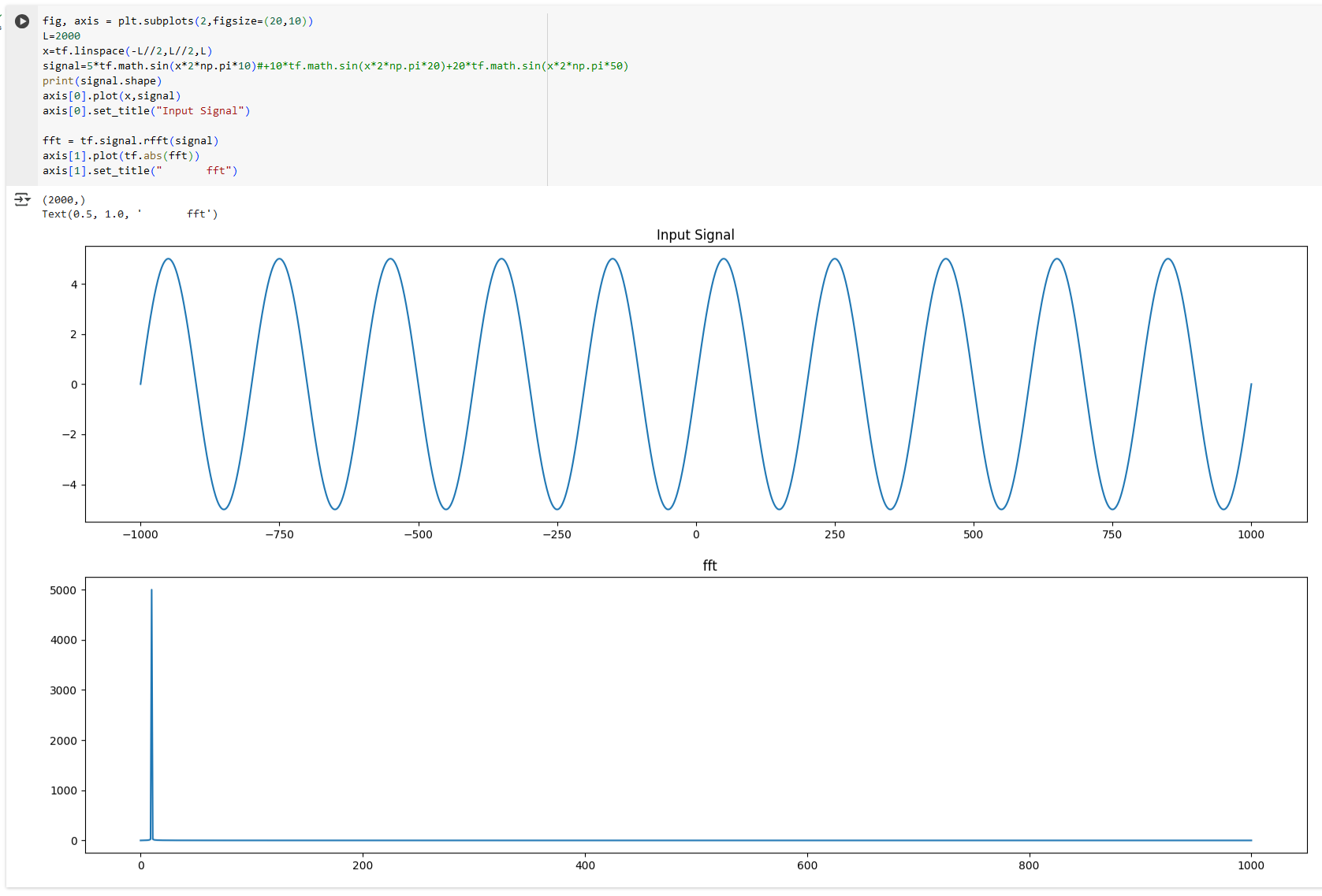

Before we dive into speech analysis, let's understand what the fast Fourier transform (FFT) is. An audio signal can be represented as a sum of sine waves with different frequencies and amplitudes (intensities). The FFT algorithm extracts the individual sine and cosine components, together with their intensities, that combine to form a segment of the audio wave.

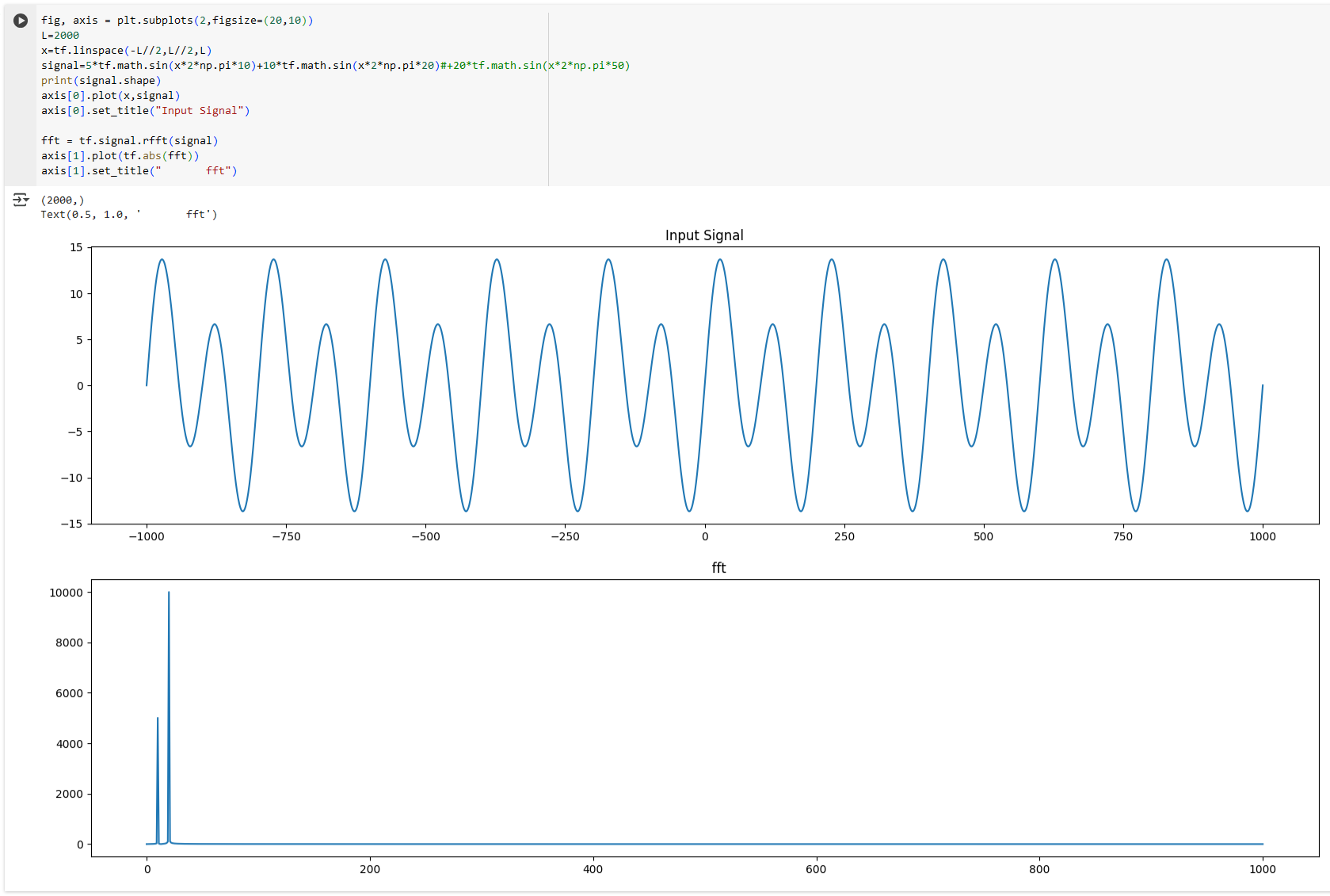

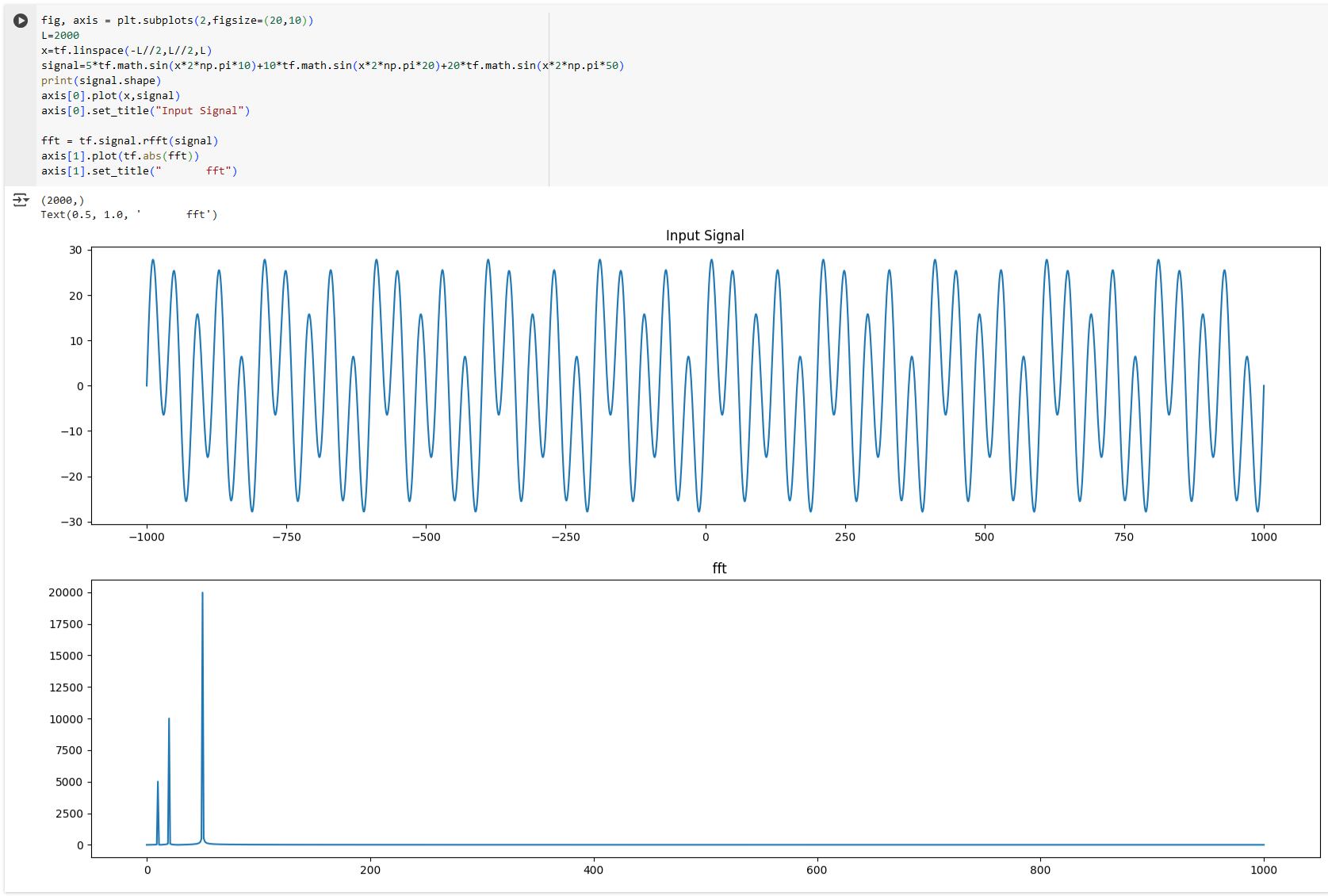

The examples below show how different sine waves appear in their FFT representation.

Sine wave -> `5*tf.math.sin(x*2*np.pi*10)`

Sine wave -> `5*tf.math.sin(x*2*np.pi*10) + 10*tf.math.sin(x*2*np.pi*20)`

Sine wave -> `5*tf.math.sin(x*2*np.pi*10) + 10*tf.math.sin(x*2*np.pi*20) + 20*tf.math.sin(x*2*np.pi*50)`
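To make the idea concrete, here is a minimal sketch that builds the third wave above and recovers its components with an FFT. It uses NumPy instead of TensorFlow to stay short, and the 1000 Hz sample rate is an assumption chosen so that each FFT bin is exactly 1 Hz wide.

```python
import numpy as np

SAMPLE_RATE = 1000  # samples per second (assumed for this sketch)
x = np.arange(SAMPLE_RATE) / SAMPLE_RATE  # one second of time stamps

# The third example above: 10 Hz, 20 Hz and 50 Hz components.
wave = (5 * np.sin(x * 2 * np.pi * 10)
        + 10 * np.sin(x * 2 * np.pi * 20)
        + 20 * np.sin(x * 2 * np.pi * 50))

spectrum = np.fft.rfft(wave)                           # FFT of a real signal
freqs = np.fft.rfftfreq(len(wave), d=1 / SAMPLE_RATE)  # frequency of each bin in Hz

# Scale the magnitudes so each bin reads as the amplitude of its sine component.
amplitudes = 2 * np.abs(spectrum) / len(wave)

# Keep only the bins that carry noticeable energy.
peaks = {int(round(f)): round(float(a), 2)
         for f, a in zip(freqs, amplitudes) if a > 0.5}
print(peaks)  # {10: 5.0, 20: 10.0, 50: 20.0}
```

The FFT returns exactly the frequencies (10, 20, 50) and intensities (5, 10, 20) that were used to build the wave.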

Now imagine these waves being repeated every second.

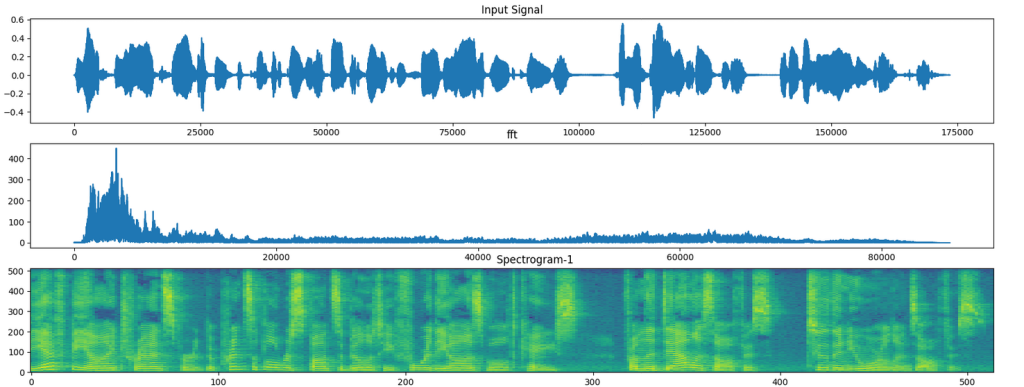

The wave will look like this:

We take a 1-second window and compute the FFT for that window.

We then rotate each window's FFT by 90 degrees and join the windows side by side in time order. The result is called a spectrogram.
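The windowing step can be sketched in a few lines of NumPy. This is only an illustration under simple assumptions: a 1000 Hz sample rate, non-overlapping 1-second windows as described above, and a hypothetical helper `tone` that generates one second of a sine wave.

```python
import numpy as np

SAMPLE_RATE = 1000    # samples per second (assumed)
WINDOW = SAMPLE_RATE  # one-second window, as in the text

def tone(freq_hz, amplitude, seconds=1):
    """Hypothetical helper: a sine wave of the given frequency and amplitude."""
    t = np.arange(seconds * SAMPLE_RATE) / SAMPLE_RATE
    return amplitude * np.sin(2 * np.pi * freq_hz * t)

# Three seconds of audio, with a different tone in each second.
signal = np.concatenate([tone(10, 5), tone(20, 10), tone(50, 20)])

# Cut the signal into non-overlapping windows and take the FFT of each one.
frames = signal.reshape(-1, WINDOW)                     # (n_windows, WINDOW)
magnitudes = 2 * np.abs(np.fft.rfft(frames, axis=1)) / WINDOW

# "Rotate by 90 degrees": frequency on the vertical axis, time on the horizontal axis.
spectrogram = magnitudes.T                              # (n_freq_bins, n_windows)

print(spectrogram.shape)           # (501, 3)
print(spectrogram.argmax(axis=0))  # [10 20 50]
```

Each column of `spectrogram` is the FFT of one window, so the strongest frequency moves from 10 Hz to 20 Hz to 50 Hz over time. Practical speech pipelines typically use much shorter, overlapping windows, but the principle is the same.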

For a full picture, a complex audio wave and its spectrogram look like the example below.

One step in speech recognition is to identify a sound and map it to text. The spectrogram is used to extract the frequency patterns that correspond to individual letters.

Connectionist Temporal Classification (CTC)

The next step is to join these letters into a word. People emphasize different parts of a word when they speak, so the same word can come out of the spectrogram as different character sequences. For example, the word “Good” may come out as “Ggodd”, “Godd”, or “Goooodd”.

CTC solves the problem by allowing flexible alignment between input and output sequences.

It introduces a special blank token (-), which allows the model to skip frames where no phoneme or character needs to be emitted.

Example possible outputs for "CAT":

`C - A - T`

`C C A T T`

`- C A T -`

All of these are valid and will be collapsed into “CAT”.

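Consecutive repeated characters are merged first (e.g., “CCAAAT” → “CAT”).

Blanks (-) are then removed (e.g., “C-A--T” → “CAT”). Because merging happens before blank removal, a genuine double letter survives only if a blank separates the two copies (e.g., “GO-OD” → “GOOD”).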
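The two collapsing rules fit in a short function. The sketch below is illustrative only: `ctc_collapse` is a hypothetical name, and it works on plain strings rather than on model outputs.

```python
from itertools import groupby

BLANK = "-"

def ctc_collapse(alignment: str, blank: str = BLANK) -> str:
    """Merge consecutive repeated characters, then drop blanks."""
    merged = [char for char, _ in groupby(alignment)]
    return "".join(char for char in merged if char != blank)

for alignment in ["C-A-T", "CCATT", "-CAT-", "CCAAAT", "C-A--T"]:
    print(alignment, "->", ctc_collapse(alignment))  # every line ends in CAT

# The order of the two steps matters for double letters:
print(ctc_collapse("GGOODD"))   # GOD  (the two O's are merged)
print(ctc_collapse("GGO-ODD"))  # GOOD (the blank keeps them apart)
```

Merging before removing blanks is what lets the blank token separate the two O's in “GOOD”.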

During training, CTC sums the probabilities of all alignments that collapse to the correct output sequence and maximizes that total likelihood. This eliminates the need for manual alignment between input frames and output labels.
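For a tiny example, the sum over alignments can be computed by brute force. The per-frame probabilities below are made up for illustration, and the two-letter alphabet and function names are assumptions. Real implementations get the same quantity with dynamic programming instead of enumerating every path.

```python
from itertools import groupby, product
import math

BLANK = "-"
SYMBOLS = ["A", "B", BLANK]

# Hypothetical softmax outputs for 3 frames (each row sums to 1).
frame_probs = [
    {"A": 0.6, "B": 0.1, BLANK: 0.3},
    {"A": 0.5, "B": 0.2, BLANK: 0.3},
    {"A": 0.1, "B": 0.7, BLANK: 0.2},
]

def collapse(path):
    """Merge consecutive repeats, then drop blanks."""
    merged = [s for s, _ in groupby(path)]
    return "".join(s for s in merged if s != BLANK)

def ctc_probability(target):
    """Sum the probability of every frame-level path that collapses to target."""
    total = 0.0
    for path in product(SYMBOLS, repeat=len(frame_probs)):
        if collapse(path) == target:
            total += math.prod(frame_probs[t][s] for t, s in enumerate(path))
    return total

p_ab = ctc_probability("AB")  # paths: AAB, ABB, A-B, AB-, -AB
print(round(p_ab, 3))         # 0.549
loss = -math.log(p_ab)        # the quantity CTC training minimizes
```

Five different alignments collapse to “AB”, and their probabilities add up to 0.549. Training pushes this sum up, which is the same as pushing the negative log-likelihood `loss` down.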

Putting it together

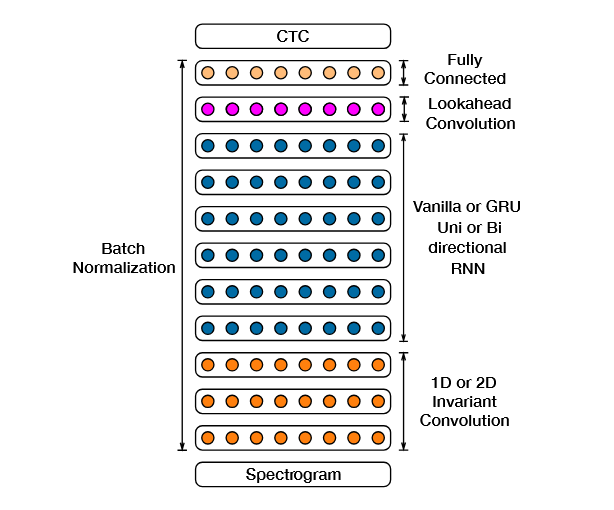

The paper “Deep Speech 2: End-to-End Speech Recognition in English and Mandarin” shows how to pass a spectrogram through a neural network whose output serves as the input to the CTC function.

Model preparation

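    import tensorflow as tf
    from tensorflow.keras.layers import (Input, Conv2D, LayerNormalization,
                                         Reshape, Bidirectional, GRU, Dense)

    # `normalization` (a Keras Normalization layer) and `vocabulary`
    # (the list of output characters) are assumed to be defined earlier.
    input_spectrogram = Input((None, 129, 1), name="input")  # (time, frequency, channel)
    x = normalization(input_spectrogram)

    # Two convolution blocks; the first one halves the time axis.
    x = Conv2D(32, kernel_size=[11, 41], strides=[2, 2], padding='same', activation='relu')(x)
    x = LayerNormalization()(x)
    x = Conv2D(64, kernel_size=[11, 21], strides=[1, 2], padding='same', activation='relu')(x)
    x = LayerNormalization()(x)

    # Flatten frequency and channels into one feature vector per time step.
    x = Reshape((-1, x.shape[-2] * x.shape[-1]))(x)

    x = Bidirectional(GRU(128, return_sequences=True))(x)
    x = Bidirectional(GRU(128, return_sequences=True))(x)
    x = Bidirectional(GRU(128, return_sequences=True))(x)

    # One extra output class for the CTC blank token.
    output = Dense(len(vocabulary) + 1, activation="softmax")(x)

    model = tf.keras.Model(input_spectrogram, output, name="DeepSpeech_2")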
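The layer shapes in this model can be checked by hand. The sketch below redoes the arithmetic in plain Python, assuming the usual rule that a convolution with `padding='same'` produces `ceil(size / stride)` outputs along each axis. The helper names are hypothetical.

```python
import math

def same_padding_output(size: int, stride: int) -> int:
    """Output length of a convolution with padding='same'."""
    return math.ceil(size / stride)

# Frequency axis: 129 bins, halved by each of the two convolutions.
freq_after_conv1 = same_padding_output(129, 2)               # 65
freq_after_conv2 = same_padding_output(freq_after_conv1, 2)  # 33
features_per_frame = freq_after_conv2 * 64                   # 33 bins * 64 channels

def output_time_steps(input_frames: int) -> int:
    """Time strides are 2 (first convolution) and 1 (second convolution)."""
    return same_padding_output(same_padding_output(input_frames, 2), 1)

def conv2d_params(kernel_h: int, kernel_w: int, in_channels: int, filters: int) -> int:
    """Weights plus one bias per filter."""
    return kernel_h * kernel_w * in_channels * filters + filters

print(freq_after_conv1, freq_after_conv2, features_per_frame)       # 65 33 2112
print(output_time_steps(200))                                       # 100
print(conv2d_params(11, 41, 1, 32), conv2d_params(11, 21, 32, 64))  # 14464 473152
```

The time axis matters for CTC: a spectrogram with 200 frames yields 100 output steps, and the output must have at least as many steps as the transcript has characters.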

    model.summary()

    Model: "DeepSpeech_2"
    ________________________________________________________________________________
    Layer (type)                                Output Shape            Param #
    ================================================================================
    input (InputLayer)                          [(None, None, 129, 1)]  0
    normalization (Normalization)               (None, None, 129, 1)    3
    conv2d (Conv2D)                             (None, None, 65, 32)    14464
    layer_normalization (LayerNormalization)    (None, None, 65, 32)    64
    conv2d_1 (Conv2D)                           (None, None, 33, 64)    473152
    layer_normalization_1 (LayerNormalization)  (None, None, 33, 64)    128
    reshape (Reshape)                           (None, None, 2112)      0
    bidirectional (Bidirectional)               (None, None, 256)       1721856
    bidirectional_1 (Bidirectional)             (None, None, 256)       296448
    bidirectional_2 (Bidirectional)             (None, None, 256)       296448
    dense (Dense)                               (None, None, 32)        8224
    ================================================================================
    Total params: 2,810,787
    Trainable params: 2,810,784
    Non-trainable params: 3
    ________________________________________________________________________________

Define the CTC loss

    def ctc_loss(y_true, y_pred):
        batch_size = tf.shape(y_pred)[0]
        pred_length = tf.shape(y_pred)[1]  # number of output time steps
        true_length = tf.shape(y_true)[1]  # (padded) label length

        # ctc_batch_cost expects one length per sample, shaped (batch_size, 1).
        pred_length = pred_length * tf.ones([batch_size, 1], dtype=tf.int32)
        true_length = true_length * tf.ones([batch_size, 1], dtype=tf.int32)

        return tf.keras.backend.ctc_batch_cost(y_true, y_pred, pred_length, true_length)

Training the model

    model.compile(
        loss=ctc_loss,
        optimizer=tf.keras.optimizers.Adam(learning_rate=LR),
    )

    model.load_weights('/content/drive/MyDrive/nlp/ctc_keras.h5')

    history = model.fit(
        train_dataset,
        validation_data=val_dataset,
        verbose=1,
        epochs=N_EPOCHS
    )

Cheers!!!

Amit Tomar