Events

Events are notifications of a change of state. Interested parties can subscribe to events and act on them. The event generator may not know what action is taken and may receive no feedback that the event has been processed.
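
The subscribe-and-react relationship can be illustrated with a minimal in-process sketch. The `EventBus` class, its method names, and the `order_placed` event type are hypothetical and exist only for illustration; a real platform would be distributed and durable.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventBus:
    """Hypothetical in-process bus used only to illustrate the idea."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        # An interested party registers a callback for one event type.
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # Fire and forget: nothing is returned, so the event generator
        # learns nothing about who handled the event or how.
        for handler in self._subscribers.get(event_type, []):
            handler(payload)


bus = EventBus()
received: List[dict] = []
bus.subscribe("order_placed", received.append)
bus.publish("order_placed", {"order_id": 1})
```

Here `publish` deliberately returns nothing, which mirrors the point that the generator gets no confirmation of processing.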

Event stream

An event stream is a continuous, unbounded series of events from multiple sources. The stream may have started before we began processing it. Events are ordered by the time they were created.
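
As a rough sketch of ordering by creation time, the snippet below merges several sources into one stream. It assumes each source already emits its own events in creation order; the `Event` fields and the `merge_streams` name are illustrative assumptions, not part of any particular platform.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class Event(NamedTuple):
    created_at: float  # creation time in seconds (assumed field)
    source: str
    payload: str


def merge_streams(*sources: Iterable[Event]) -> Iterator[Event]:
    # heapq.merge consumes its sorted inputs lazily, so it also works
    # when the sources are unbounded generators.
    return heapq.merge(*sources, key=lambda event: event.created_at)


web = [Event(1.0, "web", "click"), Event(4.0, "web", "click")]
iot = [Event(2.0, "iot", "temperature"), Event(3.0, "iot", "temperature")]
merged = list(merge_streams(web, iot))
```

Because the merge is lazy, a consumer can start reading the combined stream without waiting for any source to finish.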

Event Stream Processing Platform (ESPP)

An Event Stream Processing Platform (ESPP) is a highly scalable and durable system capable of continuously ingesting a large number of events per second from various sources. The collected data is available within milliseconds to intelligent applications that can react to events as they happen.

The ultimate goal of an ESPP is to capture business events as they happen and react to them in real time to deliver responsive and personalized customer experiences.

Types of data that can be ingested

An ESPP can ingest data from various sources such as website clickstreams, database event streams, financial transactions, social media feeds, server logs, and events coming from IoT devices.

Processing of ingested data

The captured data must be made available to applications that know how to process it to gain real-time, actionable insights.

Stream processing applications

These applications query an incoming stream of data to detect interesting patterns and take action on them. Real-time anomaly detection, complex event processing, and infrastructure monitoring are a few examples.
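
A simple pattern query might look like the sketch below, which flags every run of consecutive readings above a threshold. The function name, the threshold, and the run length are arbitrary assumptions chosen for illustration; production engines offer far richer pattern languages.

```python
from typing import Iterable, Iterator, List


def detect_runs(
    readings: Iterable[float], threshold: float, run_length: int
) -> Iterator[List[float]]:
    """Yield each run of `run_length` consecutive readings above `threshold`."""
    run: List[float] = []
    for value in readings:
        if value > threshold:
            run.append(value)
            if len(run) == run_length:
                yield list(run)  # pattern matched: hand it to the caller
                run.clear()      # start looking for the next match
        else:
            run.clear()          # the streak is broken


alerts = list(detect_runs([1, 9, 9, 9, 1, 9, 9], threshold=5, run_length=3))
```

The generator processes one reading at a time and keeps only the current streak in memory, so it suits an unbounded input.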

Streaming analytics

These applications perform computations on incoming streams of data to produce metrics in real time. Real-time counters, moving averages, and sliding-window operations are typical use cases, for example in financial trading and infrastructure monitoring.
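
A moving average over a sliding window can be sketched as follows. This is a minimal count-based window; the `moving_average` name and the window size are assumptions, and real engines usually also support time-based windows.

```python
from collections import deque
from typing import Deque, Iterable, Iterator


def moving_average(values: Iterable[float], window: int) -> Iterator[float]:
    """Yield the average of the most recent `window` values after each value."""
    buffer: Deque[float] = deque(maxlen=window)
    total = 0.0
    for value in values:
        if len(buffer) == window:
            total -= buffer[0]  # the oldest value is about to be evicted
        buffer.append(value)
        total += value
        yield total / len(buffer)


averages = list(moving_average([1, 2, 3, 4], window=2))
```

Keeping a running total avoids re-summing the window for every new event, which matters at high event rates.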

Real-time dashboards

These applications present incoming stream data on interactive real-time dashboards. They are suitable for monitoring and making informed decisions. Real-time sales, traffic monitoring, and incident reports are some examples.

Data pipelines and sinks

These applications move ingested data from the ESPP to multiple targets such as data lakes, data warehouses, and OLAP databases in real time. Those target systems are best suited for offline analytics and interactive queries. Often, the moved data is fed into batch analytics engines such as Apache Spark or Hadoop. The pipeline model can also be used to chain consumers in sequence.
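
Chaining consumers in sequence can be sketched with generator stages, where each stage reads the output of the previous one. The stage names, the `amount` field, and the in-memory list standing in for a data lake or warehouse are all illustrative assumptions.

```python
from typing import Callable, Iterable, Iterator, List

Event = dict
Stage = Callable[[Iterable[Event]], Iterator[Event]]


def parse_amount(events: Iterable[Event]) -> Iterator[Event]:
    # Stage 1: convert the raw string amount into a number.
    for event in events:
        yield {**event, "amount": float(event["amount"])}


def only_large(events: Iterable[Event]) -> Iterator[Event]:
    # Stage 2: keep only events at or above an arbitrary example threshold.
    for event in events:
        if event["amount"] >= 100.0:
            yield event


def run_pipeline(source: Iterable[Event], stages: List[Stage], sink: List[Event]) -> None:
    stream: Iterable[Event] = source
    for stage in stages:
        stream = stage(stream)  # each stage consumes the previous stage's output
    for event in stream:
        sink.append(event)      # the list stands in for a data lake or warehouse


warehouse: List[Event] = []
run_pipeline([{"amount": "50"}, {"amount": "150"}], [parse_amount, only_large], warehouse)
```

Since every stage is a generator, events flow through the chain one at a time rather than being materialized between stages.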

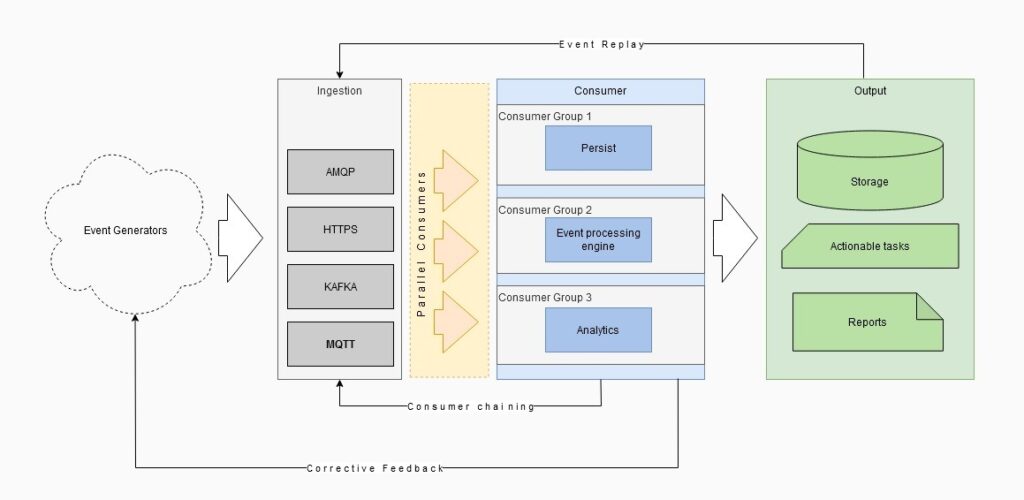

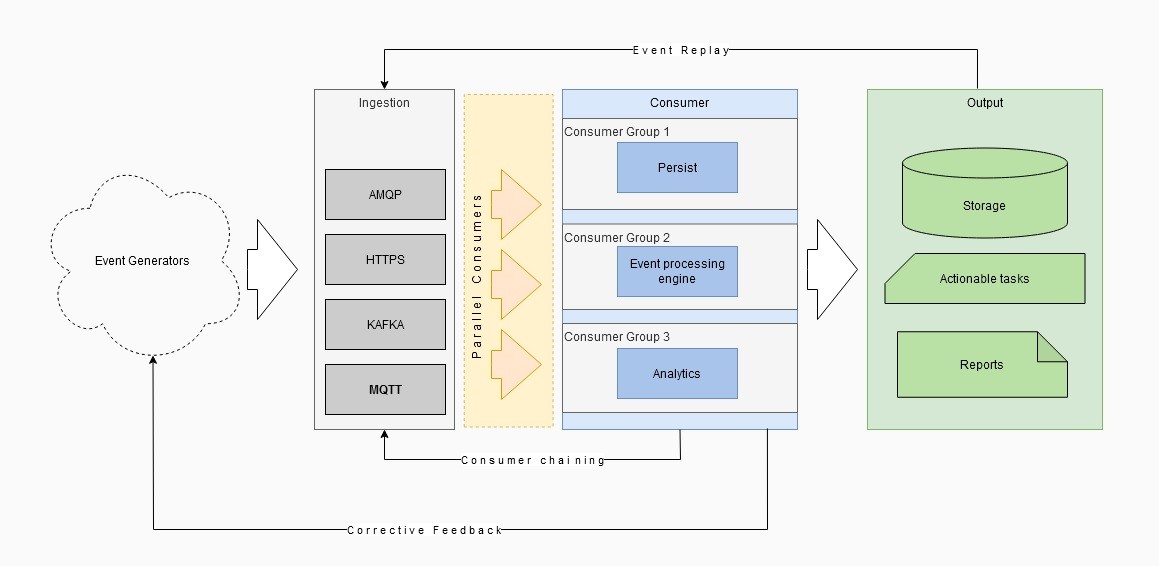

High-level architecture of an ESPP

Ingestion layer

This layer is responsible for receiving events from event sources at very high throughput.

The ingestion layer often accepts event data over multiple transport protocols such as HTTPS, AMQP, MQTT, and Kafka. This lets it cater to a wide range of event sources beyond those that speak HTTPS.

Storage layer

The ingested data is then handed over to the processing layer, which can persist the raw events in addition to processing them in parallel. The processing layer can also send corrective actions back to event generators in self-healing models.

Consumption layer

The stored data is then consumed by consumer applications through this layer. Each consumer reads one or more partitions of a topic as a member of a logical group called a consumer group. This parallelizes event consumption and improves throughput on the consumer side.
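
How partitions might be spread across the members of a consumer group can be sketched with a simple round-robin assignment. This is a simplified illustration under assumed names (`assign_partitions`, `c1`, `c2`); real platforms apply their own assignment strategies and rebalance when members join or leave.

```python
from typing import Dict, List


def assign_partitions(partitions: List[int], consumers: List[str]) -> Dict[str, List[int]]:
    """Distribute topic partitions over group members in round-robin order."""
    if not consumers:
        raise ValueError("a consumer group needs at least one consumer")
    assignment: Dict[str, List[int]] = {consumer: [] for consumer in consumers}
    for index, partition in enumerate(sorted(partitions)):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition)  # each partition has exactly one owner
    return assignment


group = assign_partitions([0, 1, 2, 3, 4], ["c1", "c2"])
```

Each partition is owned by exactly one consumer in the group, so members can read in parallel without processing the same event twice.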

Benefits of an Event Stream Processing Platform

Loose coupling between producers and consumers

By putting an ESPP in between your data producers and consumers, you can make them loosely coupled. Producers are not aware of who’s going to consume the data that they are producing. Conversely, consumers are not aware of who produced the data they are consuming. For example, an IoT sensor emitting temperature readings might not know who’s going to process them.

This makes it possible to add or remove components without significant changes to the architecture, which supports an agile business.

Provide scalable and fault-tolerant storage for real-time data

Databases are not the obvious choice for capturing millions of data items per second. An ESPP is a distributed system purpose-built to ingest millions of data records per second. This data is then stored in fault-tolerant distributed storage to keep it safe from loss.

Shock absorber for incoming data

Sometimes the rate at which data arrives is greater than the rate at which it is consumed. If downstream consumers are not performant enough to keep up with the production rate, they can be overwhelmed and crash.

In this case, the ESPP acts as a buffer that absorbs incoming data and lets consumers process it, and scale, at a comfortable rate.
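
The buffering behaviour can be sketched with a queue that accepts bursts and lets a consumer pull batches at its own pace. The `EventBuffer` class and its method names are hypothetical, and the in-memory deque stands in for the platform's durable storage.

```python
from collections import deque
from typing import Deque, Iterable, List


class EventBuffer:
    """Hypothetical in-memory stand-in for the platform's durable log."""

    def __init__(self) -> None:
        self._queue: Deque[int] = deque()

    def ingest(self, events: Iterable[int]) -> None:
        # Producers append as fast as they like; nothing waits on consumers.
        self._queue.extend(events)

    def poll(self, max_events: int) -> List[int]:
        # Consumers pull at most `max_events`, so they set their own pace.
        batch: List[int] = []
        while self._queue and len(batch) < max_events:
            batch.append(self._queue.popleft())
        return batch

    def backlog(self) -> int:
        return len(self._queue)


buffer = EventBuffer()
buffer.ingest(range(10))      # a burst of ten events arrives at once
first_batch = buffer.poll(3)  # the consumer takes only what it can handle
```

The remaining backlog is a useful signal: if it keeps growing, more consumers can be added to drain it.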

Available implementations

An ESPP should scale on demand to cater to sudden spikes in incoming and outgoing event traffic. No single component makes an ESPP. The messaging, processing, and storage components must all support the fault tolerance and scalability of the solution.

Messaging layer

- AWS Kinesis

- Azure Event Hubs

- Apache Kafka

Storage layer

- Hadoop

- Apache Cassandra

- Amazon S3

- Amazon Timestream (time series database)

Processing layer

- Apache Storm

- Drools Fusion

- Azure Stream Analytics

- Esper

- Apache Flink